There’s something I’ve been really excited about for the past little while, and some may not understand why.

It’s the new Category5 Transcoders.

Transcoding is the direct analog-to-analog or digital-to-digital conversion of one encoding to another, such as for movie data files or audio files. This is usually done in cases where a target device (or workflow) does not support the format or has limited storage capacity that mandates a reduced file size, or to convert incompatible or obsolete data to a better-supported or modern format. [Wikipedia]

Here is what I wanted to achieve in building a custom transcoding platform for Category5:

- Become HTML5 video compliant.

- Provide screaming fast file delivery via RSS or direct download.

- Provide instant video loading in browser embeds, with instant playback when seeking to specific points in the timeline.

- Provide Flash fallback for users with terrible, terrible systems.

- Ensure our show is accessible across all devices, all platforms, and in all nations.

- Make back-episodes available, even ones which are no longer available through any other means.

- Reduce the file size of each version of each episode in order to keep costs down for us as well as improve performance for our viewers.

- Ensure our video may be distributed by web site embeds, popup windows, RSS feed aggregators, iTunes, Miro Internet TV, Roku, and more.

- Ensure our videos are compatible with current monetization platforms such as Google AdSense for Video.

In the past, we’ve been limited to third-party services from Blip and YouTube. Both of these services are huge parts of what we do, but relying on them exclusively has had some issues:

- Both Blip and YouTube services are blocked in Mainland China, meaning our viewers there have trouble tuning in.

- Both services, in their default state, require manual labour in order to place episodes online in a clean way (e.g., including appropriate title, description and playlist integration).

- Blip does not monetize well.

- YouTube monetizes well on their site, but they restrict advertising on embeds (so if people watch the show through our site rather than directly on YouTube, we don’t get paid).

The process of transcoding the files and making them available to our viewers has been an onerous task since the get-go. We grew so quickly during Season 1 that we didn’t really have the infrastructure to provide the massive amount of video that was to go out each month. We had one month in 2012, for example, where we served nearly 125 terabytes of video.
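To put that number in perspective, here is a rough back-of-the-envelope calculation of the average sustained throughput it implies. It assumes decimal terabytes (10^12 bytes) and a 30-day month, neither of which is stated above, so treat the result as a ballpark figure only.

```python
# Assumptions (not from the post): decimal terabytes and a 30-day month.
TERABYTE = 10**12                      # bytes
bytes_served = 125 * TERABYTE          # "nearly 125 terabytes" in one month
seconds_in_month = 30 * 24 * 60 * 60

# Convert bytes to bits, spread over the month, and express in megabits per second.
average_mbps = bytes_served * 8 / seconds_in_month / 10**6
print(f"Average throughput: {average_mbps:.0f} Mbit/s")
```

That works out to roughly 386 Mbit/s around the clock, and real traffic peaks well above the average right after an episode is released.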

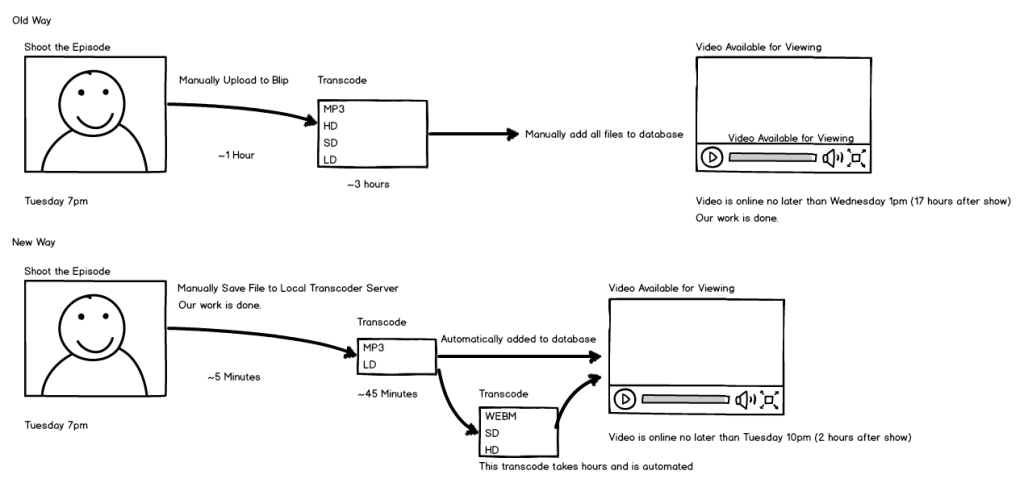

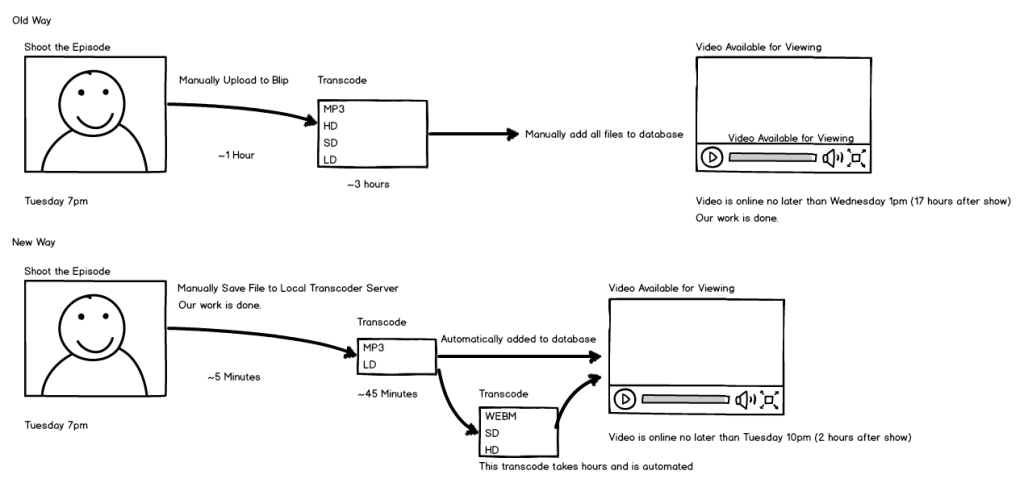

It takes me many hours each week just to make the files available to our viewers, and the new transcoder has been developed to cut that task down to only a few minutes, while simultaneously pumping out the video much, much faster.

I’ll try to explain how this happens in a mockup:

The new transcoder not only does things faster: it does things simultaneously.


While transcoding the files for the RSS feeds, it has already placed a web-embedded copy of the show on our web site, in as little as 45 minutes. Not only that, but once it’s all said and done, the transcoder server then automatically uploads the file to Blip.
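For the curious, here is a minimal sketch of that "do everything at once" idea. It is not the actual Category5 transcoder code: the profile names and the `transcode` and `process_episode` functions are hypothetical stand-ins, and the real encode step is replaced with a placeholder that just returns a file name.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

# Hypothetical output profiles; the real transcoder's formats are not listed here.
PROFILES = ["web-embed", "rss-hd", "rss-sd", "rss-audio"]


def transcode(episode, profile):
    """Stand-in for a real encode job; returns the name of the output file."""
    return f"{episode}.{profile}.out"


def process_episode(episode):
    """Run every profile in parallel and record events in the order they happen."""
    events = []
    with ThreadPoolExecutor(max_workers=len(PROFILES)) as pool:
        jobs = {pool.submit(transcode, episode, p): p for p in PROFILES}
        for job in as_completed(jobs):
            profile = jobs[job]
            output = job.result()
            if profile == "web-embed":
                # The embed goes live as soon as it is ready,
                # without waiting for the RSS versions.
                events.append(f"published {output} to web site")
            else:
                events.append(f"added {output} to RSS feed")
    # Only after every job has finished is the episode sent to the third-party host.
    events.append(f"uploaded {episode} to Blip")
    return events


if __name__ == "__main__":
    for event in process_episode("episode-312"):
        print(event)
```

The point of the design is that each version is published the moment it finishes, so the web embed never waits on the RSS files, and the Blip upload is the only step that waits for everything else.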

The new transcoder consists of two servers at two different locations sharing the task itself, and then the files are distributed through two of our CDNs (one powered by Amazon, the other our own affordable solution based on the old “alt” feed model).

We have been working with the team at Flowplayer, who are soon to introduce a public transcoding and hosting / distribution service for content providers. With this new relationship, we will be able to serve up ads in a friendly way to help offset distribution costs. This also means we now have our own embed player, no longer relying on YouTube or Blip’s embedded player.

This means viewers in Mainland China can now watch Category5 directly through our main web site. No more workarounds!

As long as we can offset the added expense of self-hosting video, this could lead to some great things. I’ll be keeping an eye on it over the next while, and encourage you to submit your feedback. I love the idea of Category5 finally being accessible to everyone, everywhere, and very quickly following each show. I also love that my Tuesday nights will no longer be so arduously long.

Transcoders are a very difficult thing to explain, and so is the way we’re using them, but to me, it’s exciting. Just know that it means “everything is better than ever”, with fast video load time through our site, RSS feeds that are more than 10x faster than before, global access (even in Mainland China), and room to grow.

I’m currently running the system through countless tests, but the transcoders are live. They will be working their way (automatically) through back-episodes, so you’ll start to see the YouTube player disappearing from the site, replaced with our own player. Eventually, all 312+ episodes will be available.

Thanks for growing with us!

– Robbie