If you haven’t read part 1 yet, make sure you start there.

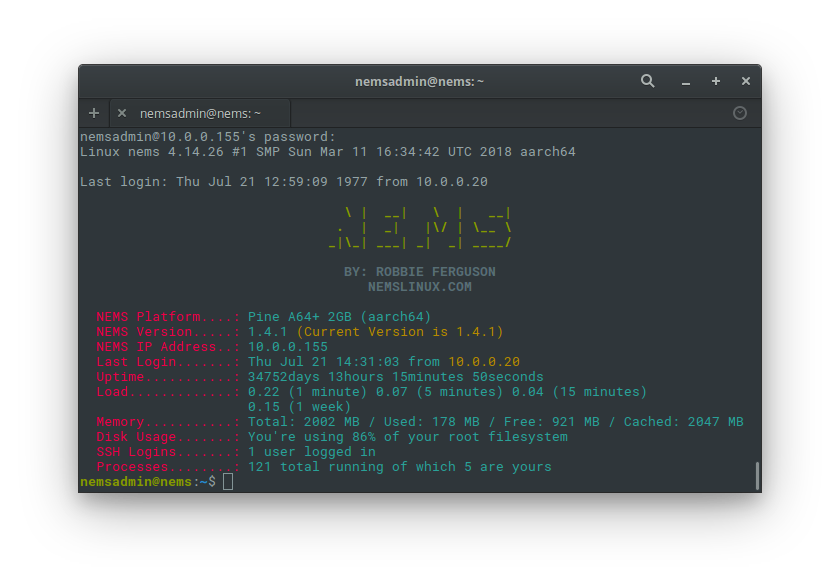

Okay — I can’t wait 24 hours — I’m an eager beaver. But 14 hours later, the system is still showing the correct time. Let’s play.

    root@nems:/usr/local/share/nems/nems-scripts# apt update && apt upgrade
    Ign:1 http://download.webmin.com/download/repository sarge InRelease
    Ign:2 http://deb.debian.org/debian stretch InRelease
    Hit:3 http://ftp.debian.org/debian stretch-backports InRelease
    Hit:4 http://deb.debian.org/debian stretch Release
    Hit:5 http://download.webmin.com/download/repository sarge Release
    Ign:6 http://webmin.mirror.somersettechsolutions.co.uk/repository sarge InRelease
    Hit:7 http://webmin.mirror.somersettechsolutions.co.uk/repository sarge Release
    Hit:8 https://repo.foxiehost.eu/debian stretch InRelease
    Ign:9 https://apt.izzysoft.de/ubuntu generic InRelease
    Hit:10 https://apt.izzysoft.de/ubuntu generic Release
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    All packages are up to date.
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    Calculating upgrade... Done
    0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.
    root@nems:/usr/local/share/nems/nems-scripts# apt dist-upgrade
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    Calculating upgrade... Done
    0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded.

Okay – so I’m on the absolute most amazingly modern kernel ever. Great! So let’s fix that kernel.

We’ll check again to see if the timer workaround is implemented in our kernel:

    root@nems:/usr/local/share/nems/nems-scripts# grep CONFIG_FSL_ERRATUM_A008585 /boot/config-4.14.26
    # CONFIG_FSL_ERRATUM_A008585 is not set
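A grep like this can come back in three shapes: `CONFIG_FOO=y` (or another value), the comment `# CONFIG_FOO is not set`, or nothing at all. Here is a minimal sketch of a helper that tells them apart. The function name `kconfig_state` is made up for this post, not something the kernel ships.

```shell
# Report how a Kconfig symbol is recorded in a kernel config file.
# Prints the value (y, m, a number or string), "not set", or "missing".
# kconfig_state is a hypothetical helper name, not a kernel tool.
kconfig_state() {
    config_file=$1   # e.g. /boot/config-4.14.26
    symbol=$2        # e.g. CONFIG_FSL_ERRATUM_A008585

    if [ ! -r "$config_file" ]; then
        echo "unreadable"
        return 2
    fi

    # An enabled symbol looks like "CONFIG_FOO=y".
    value=$(sed -n "s/^${symbol}=//p" "$config_file" | head -n 1)
    if [ -n "$value" ]; then
        echo "$value"
        return 0
    fi

    # A disabled symbol is recorded as a comment line.
    if grep -q "^# ${symbol} is not set\$" "$config_file"; then
        echo "not set"
        return 0
    fi

    # No line at all: this config file does not mention the symbol.
    echo "missing"
    return 1
}
```

Calling `kconfig_state /boot/config-4.14.26 CONFIG_FSL_ERRATUM_A008585` on the system above should print `not set`, going by the grep output.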

So what does this tell us?

CONFIG_FSL_ERRATUM_A008585 shows whether the workaround for Freescale/NXP Erratum A-008585 is active. The workaround’s description is “This option enables a workaround for Freescale/NXP Erratum A-008585 (“ARM generic timer may contain an erroneous value”). The workaround will only be active if the fsl,erratum-a008585 property is found in the timer node.”
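That last sentence matters: even with the option compiled in, the workaround only switches on if the board's device tree carries the `fsl,erratum-a008585` property. On a running ARM system the device tree is exposed as files under `/proc/device-tree`, so a rough check is possible. This is only a sketch: `dt_find_property` is a name I made up, and I'm searching the whole tree rather than assuming what the timer node is called.

```shell
# Search an exported device tree for a property by name.
# dt_find_property is a hypothetical helper name.
# Prints every matching path; returns 1 if the property is absent.
dt_find_property() {
    dt_root=$1     # assumption: /proc/device-tree on the running board
    property=$2    # e.g. fsl,erratum-a008585

    [ -d "$dt_root" ] || return 2

    # -L follows symlinks, since /proc/device-tree is usually a symlink.
    matches=$(find -L "$dt_root" -name "$property" 2>/dev/null)
    [ -n "$matches" ] || return 1

    printf '%s\n' "$matches"
}
```

Running `dt_find_property /proc/device-tree 'fsl,erratum-a008585'` and getting nothing back would suggest that flipping the kernel option alone is not enough on that board.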

For those who are even nerdier than me, check out kernel.org’s log of the patch here: https://patchwork.kernel.org/patch/9487241/

You’re such a nerd!

Now I’m getting into the experimental. I’ve never changed a kernel config before. Funny, that. I guess if you’ve never needed to do something, you never learn to do it.

I have yet to find online instructions for doing this, so here’s what I’ll try.

Note for tl;dr – this didn’t work.

Alright, let’s change my default config as we prepare to re-compile the kernel. I’m unsure if changing this config will make the newly compiled kernel receive the change or not, but it’s worth trying.

    root@nems:/tmp# sed -i "s/# CONFIG_FSL_ERRATUM_A008585.*/CONFIG_FSL_ERRATUM_A008585=y/g" /boot/config-4.14.26
    root@nems:/tmp# grep CONFIG_FSL_ERRATUM_A008585 /boot/config-4.14.26
    CONFIG_FSL_ERRATUM_A008585=y

Great, my config is now set to include this patch.
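Worth keeping in mind: `/boot/config-4.14.26` is a saved copy of the options the kernel was built with, so editing it changes the record, not the running kernel. If you want to try the same sed trick on any config file without losing the original, something like this sketch keeps a backup first. `kconfig_enable` is a hypothetical helper name, and it only handles the "is not set" to "=y" case.

```shell
# Turn "# CONFIG_FOO is not set" into "CONFIG_FOO=y" in a config file,
# keeping the untouched original next to it as <file>.bak.
# kconfig_enable is a hypothetical helper name.
kconfig_enable() {
    config_file=$1
    symbol=$2

    [ -w "$config_file" ] || return 2
    cp -p "$config_file" "$config_file.bak" || return 2

    # Anchor the pattern to the whole line so similarly named
    # symbols are left alone.
    sed "s/^# ${symbol} is not set\$/${symbol}=y/" \
        "$config_file.bak" > "$config_file"

    # Succeed only if the symbol really ended up enabled.
    grep -q "^${symbol}=y\$" "$config_file"
}
```

If the symbol was never in the file to begin with, the function returns non-zero instead of pretending it worked.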

Okay, I’m obviously gonna need the kernel headers here…

    root@nems:/tmp/tmp# apt install linux-headers-$(uname -r)
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following NEW packages will be installed:
      linux-headers-4.14.26
    0 upgraded, 1 newly installed, 0 to remove and 0 not upgraded.
    Need to get 10.6 MB of archives.
    After this operation, 73.6 MB of additional disk space will be used.
    Get:1 https://repo.foxiehost.eu/debian stretch/main arm64 linux-headers-4.14.26 arm64 4.14.26-1 [10.6 MB]
    Fetched 10.6 MB in 4s (2,265 kB/s)
    Selecting previously unselected package linux-headers-4.14.26.
    (Reading database ... 83271 files and directories currently installed.)
    Preparing to unpack .../linux-headers-4.14.26_4.14.26-1_arm64.deb ...
    Unpacking linux-headers-4.14.26 (4.14.26-1) ...
    Setting up linux-headers-4.14.26 (4.14.26-1) ...
    root@nems:/tmp/tmp# cd /usr/src/linux-headers-4.14.26/
    root@nems:/usr/src/linux-headers-4.14.26# ls
    arch block certs crypto Documentation drivers firmware fs include init ipc Kconfig kernel lib Makefile mm Module.symvers net samples scripts security sound tools usr virt

Looks good, and the default setting here matches what my running kernel’s config said before I edited it:

    root@nems:~# cd /usr/src/linux-headers-4.14.26
    root@nems:/usr/src/linux-headers-4.14.26# grep CONFIG_FSL_ERRATUM_A008585 /usr/src/linux-headers-4.14.26/.config
    # CONFIG_FSL_ERRATUM_A008585 is not set

Great! I think I’ve made a connection… this file has the same setting as my default kernel config.

So since I’ve already updated my own config, let’s compile with oldconfig – I’m assuming that means “grab the old config”… hmmm…. makes sense to me.
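For what it’s worth, as far as I understand it, `make oldconfig` works from the `.config` that already sits in the tree you run it in, and only asks about symbols that file doesn’t cover. It does not go and fetch an edited `/boot/config-*` when a `.config` is already there. So an edit made under `/boot` would have to be copied in first. A minimal sketch of that step, with `seed_kernel_config` being a name I invented:

```shell
# Copy a chosen config file into a kernel tree as its .config,
# saving any existing .config as .config.orig first.
# seed_kernel_config is a hypothetical helper name.
seed_kernel_config() {
    source_config=$1   # e.g. /boot/config-4.14.26
    tree=$2            # e.g. /usr/src/linux-headers-4.14.26

    [ -r "$source_config" ] || return 2
    [ -d "$tree" ] || return 2

    # Keep whatever was there before, in case the experiment fails.
    if [ -f "$tree/.config" ]; then
        cp -p "$tree/.config" "$tree/.config.orig" || return 2
    fi

    cp "$source_config" "$tree/.config"
}
```

I’m not claiming this alone would have produced a working kernel here. It only makes sure the file `make oldconfig` reads is the one that was edited.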

    root@nems:/usr/src/linux-headers-4.14.26# grep CONFIG_FSL_ERRATUM_A008585 .config
    # CONFIG_FSL_ERRATUM_A008585 is not set
    root@nems:/usr/src/linux-headers-4.14.26# make oldconfig
      HOSTCC  scripts/basic/fixdep
      HOSTCC  scripts/basic/bin2c
      HOSTLD  scripts/kconfig/conf
    scripts/kconfig/conf --oldconfig Kconfig
    #
    # configuration written to .config
    #

Alright, is it set now?

    root@nems:/usr/src/linux-headers-4.14.26# grep CONFIG_FSL_ERRATUM_A008585 .config
    root@nems:/usr/src/linux-headers-4.14.26#

Weird… the setting is gone! Well, let’s see what happens. Maybe the kernel will default to “yes” if the setting … let’s try.

    root@nems:/usr/src/linux-headers-4.14.26# make config
    scripts/kconfig/conf --oldaskconfig Kconfig
    *
    * Linux/arm64 4.14.26 Kernel Configuration
    *
    *
    * General setup
    *
    Cross-compiler tool prefix (CROSS_COMPILE) [] (NEW) ^C
    scripts/kconfig/Makefile:32: recipe for target 'config' failed
    make[1]: *** [config] Interrupt
    Makefile:541: recipe for target 'config' failed
    make: *** [config] Interrupt

Oh – if it’s going to ask me to say yes to a crapload of defaults, I’ma abort that!

I’ll trust the kernel devs and my config 😛
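Piping `yes ""` into `make config`, as in the next command, just presses Enter at every prompt. Kconfig also has a target made for exactly this: `make olddefconfig` keeps the answers already in `.config` and takes the default for everything new, without prompting. Here is a small sketch that wraps it with a sanity check. The wrapper name `accept_kconfig_defaults` is my own invention; only the `olddefconfig` target comes from the kernel build system.

```shell
# Run "make olddefconfig" in a kernel tree: existing answers in .config
# are kept, every new symbol gets its default, and nothing is asked.
# accept_kconfig_defaults is a hypothetical wrapper name.
accept_kconfig_defaults() {
    tree=$1   # e.g. /usr/src/linux-headers-4.14.26

    # Refuse to run where there is clearly nothing to configure.
    if [ ! -f "$tree/Makefile" ] || [ ! -f "$tree/.config" ]; then
        echo "no Makefile or .config in $tree" >&2
        return 2
    fi

    make -C "$tree" olddefconfig
}
```

The result should be the same kind of all-defaults config, minus a thousand lines of prompts scrolling past.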

root@nems:/usr/src/linux-headers-4.14.26# yes "" | make config

scripts/kconfig/conf --oldaskconfig Kconfig

*

* Linux/arm64 4.14.26 Kernel Configuration

*

*

* General setup

*

Cross-compiler tool prefix (CROSS_COMPILE) [] (NEW)

Compile also drivers which will not load (COMPILE_TEST) [N/y/?] (NEW)

Local version - append to kernel release (LOCALVERSION) [] (NEW)

Automatically append version information to the version string (LOCALVERSION_AUTO) [Y/n/?] (NEW)

Default hostname (DEFAULT_HOSTNAME) [(none)] (NEW)

Support for paging of anonymous memory (swap) (SWAP) [Y/n/?] (NEW)

System V IPC (SYSVIPC) [N/y/?] (NEW)

Enable process_vm_readv/writev syscalls (CROSS_MEMORY_ATTACH) [Y/n/?] (NEW)

uselib syscall (USELIB) [N/y/?] (NEW)

*

* IRQ subsystem

*

*

* Timers subsystem

*

Timer tick handling

> 1. Periodic timer ticks (constant rate, no dynticks) (HZ_PERIODIC) (NEW)

2. Idle dynticks system (tickless idle) (NO_HZ_IDLE) (NEW)

3. Full dynticks system (tickless) (NO_HZ_FULL) (NEW)

choice[1-3]: Old Idle dynticks config (NO_HZ) [N/y/?] (NEW)

High Resolution Timer Support (HIGH_RES_TIMERS) [N/y/?] (NEW)

*

* CPU/Task time and stats accounting

*

Cputime accounting

> 1. Simple tick based cputime accounting (TICK_CPU_ACCOUNTING) (NEW)

2. Full dynticks CPU time accounting (VIRT_CPU_ACCOUNTING_GEN) (NEW)

choice[1-2]: Fine granularity task level IRQ time accounting (IRQ_TIME_ACCOUNTING) [N/y/?] (NEW)

BSD Process Accounting (BSD_PROCESS_ACCT) [N/y/?] (NEW)

*

* RCU Subsystem

*

Make expert-level adjustments to RCU configuration (RCU_EXPERT) [N/y/?] (NEW)

Kernel .config support (IKCONFIG) [N/y/?] (NEW)

Kernel log buffer size (16 => 64KB, 17 => 128KB) (LOG_BUF_SHIFT) [17] (NEW)

CPU kernel log buffer size contribution (13 => 8 KB, 17 => 128KB) (LOG_CPU_MAX_BUF_SHIFT) [12] (NEW)

Temporary per-CPU printk log buffer size (12 => 4KB, 13 => 8KB) (PRINTK_SAFE_LOG_BUF_SHIFT) [13] (NEW)

*

* Control Group support

*

Control Group support (CGROUPS) [N/y/?] (NEW)

*

* Namespaces support

*

Namespaces support (NAMESPACES) [Y/?] y

UTS namespace (UTS_NS) [Y/n/?] (NEW)

User namespace (USER_NS) [N/y/?] (NEW)

PID Namespaces (PID_NS) [Y/n/?] (NEW)

Automatic process group scheduling (SCHED_AUTOGROUP) [N/y/?] (NEW)

Enable deprecated sysfs features to support old userspace tools (SYSFS_DEPRECATED) [N/y/?] (NEW)

Kernel->user space relay support (formerly relayfs) (RELAY) [N/y/?] (NEW)

Initial RAM filesystem and RAM disk (initramfs/initrd) support (BLK_DEV_INITRD) [N/y/?] (NEW)

Compiler optimization level

> 1. Optimize for performance (CC_OPTIMIZE_FOR_PERFORMANCE) (NEW)

2. Optimize for size (CC_OPTIMIZE_FOR_SIZE) (NEW)

choice[1-2]: *

* Configure standard kernel features (expert users)

*

Configure standard kernel features (expert users) (EXPERT) [N/y/?] (NEW)

Enable bpf() system call (BPF_SYSCALL) [N/y/?] (NEW)

Enable userfaultfd() system call (USERFAULTFD) [N/y/?] (NEW)

Embedded system (EMBEDDED) [N/y/?] (NEW)

PC/104 support (PC104) [N/y/?] (NEW)

*

* Kernel Performance Events And Counters

*

Kernel performance events and counters (PERF_EVENTS) [N/y/?] (NEW)

Disable heap randomization (COMPAT_BRK) [Y/n/?] (NEW)

Choose SLAB allocator

1. SLAB (SLAB) (NEW)

> 2. SLUB (Unqueued Allocator) (SLUB) (NEW)

choice[1-2?]: Allow slab caches to be merged (SLAB_MERGE_DEFAULT) [Y/n/?] (NEW)

SLAB freelist randomization (SLAB_FREELIST_RANDOM) [N/y/?] (NEW)

Harden slab freelist metadata (SLAB_FREELIST_HARDENED) [N/y/?] (NEW)

SLUB per cpu partial cache (SLUB_CPU_PARTIAL) [Y/n/?] (NEW)

Profiling support (PROFILING) [N/y/?] (NEW)

Optimize very unlikely/likely branches (JUMP_LABEL) [N/y/?] (NEW)

*

* GCC plugins

*

GCC plugins (GCC_PLUGINS) [N/y/?] (NEW)

Stack Protector buffer overflow detection

> 1. None (CC_STACKPROTECTOR_NONE) (NEW)

2. Regular (CC_STACKPROTECTOR_REGULAR) (NEW)

3. Strong (CC_STACKPROTECTOR_STRONG) (NEW)

choice[1-3?]: Use a virtually-mapped stack (VMAP_STACK) [Y/n/?] (NEW)

Perform full reference count validation at the expense of speed (REFCOUNT_FULL) [N/y/?]

*

* GCOV-based kernel profiling

*

*

* Enable loadable module support

*

Enable loadable module support (MODULES) [N/y/?] (NEW)

*

* Enable the block layer

*

Enable the block layer (BLOCK) [Y/?] y

Block layer SG support v4 (BLK_DEV_BSG) [Y/n/?] (NEW)

Block layer SG support v4 helper lib (BLK_DEV_BSGLIB) [N/y/?] (NEW)

Block layer data integrity support (BLK_DEV_INTEGRITY) [N/y/?] (NEW)

Zoned block device support (BLK_DEV_ZONED) [N/y/?] (NEW)

Block device command line partition parser (BLK_CMDLINE_PARSER) [N/y/?] (NEW)

Enable support for block device writeback throttling (BLK_WBT) [N/y/?] (NEW)

Logic for interfacing with Opal enabled SEDs (BLK_SED_OPAL) [N/y/?] (NEW)

*

* Partition Types

*

Advanced partition selection (PARTITION_ADVANCED) [N/y/?] (NEW)

*

* IO Schedulers

*

Deadline I/O scheduler (IOSCHED_DEADLINE) [Y/n/?] (NEW)

CFQ I/O scheduler (IOSCHED_CFQ) [Y/n/?] (NEW)

Default I/O scheduler

1. Deadline (DEFAULT_DEADLINE) (NEW)

> 2. CFQ (DEFAULT_CFQ) (NEW)

3. No-op (DEFAULT_NOOP) (NEW)

choice[1-3?]: MQ deadline I/O scheduler (MQ_IOSCHED_DEADLINE) [Y/n/?] (NEW)

Kyber I/O scheduler (MQ_IOSCHED_KYBER) [Y/n/?] (NEW)

BFQ I/O scheduler (IOSCHED_BFQ) [N/y/?] (NEW)

*

* Platform selection

*

Actions Semi Platforms (ARCH_ACTIONS) [N/y/?] (NEW)

Allwinner sunxi 64-bit SoC Family (ARCH_SUNXI) [N/y/?] (NEW)

Annapurna Labs Alpine platform (ARCH_ALPINE) [N/y/?] (NEW)

Broadcom BCM2835 family (ARCH_BCM2835) [N/y/?] (NEW)

Broadcom iProc SoC Family (ARCH_BCM_IPROC) [N/y/?] (NEW)

Marvell Berlin SoC Family (ARCH_BERLIN) [N/y/?] (NEW)

Broadcom Set-Top-Box SoCs (ARCH_BRCMSTB) [N/y/?] (NEW)

ARMv8 based Samsung Exynos SoC family (ARCH_EXYNOS) [N/y/?] (NEW)

ARMv8 based Freescale Layerscape SoC family (ARCH_LAYERSCAPE) [N/y/?] (NEW)

LG Electronics LG1K SoC Family (ARCH_LG1K) [N/y/?] (NEW)

Hisilicon SoC Family (ARCH_HISI) [N/y/?] (NEW)

Mediatek MT65xx & MT81xx ARMv8 SoC (ARCH_MEDIATEK) [N/y/?] (NEW)

Amlogic Platforms (ARCH_MESON) [N/y/?] (NEW)

Marvell EBU SoC Family (ARCH_MVEBU) [N/y/?] (NEW)

Qualcomm Platforms (ARCH_QCOM) [N/y/?] (NEW)

Realtek Platforms (ARCH_REALTEK) [N/y/?] (NEW)

Rockchip Platforms (ARCH_ROCKCHIP) [N/y/?] (NEW)

AMD Seattle SoC Family (ARCH_SEATTLE) [N/y/?] (NEW)

Renesas SoC Platforms (ARCH_RENESAS) [N/y/?] (NEW)

Altera's Stratix 10 SoCFPGA Family (ARCH_STRATIX10) [N/y/?] (NEW)

NVIDIA Tegra SoC Family (ARCH_TEGRA) [N/y/?] (NEW)

Spreadtrum SoC platform (ARCH_SPRD) [N/y/?] (NEW)

Cavium Inc. Thunder SoC Family (ARCH_THUNDER) [N/y/?] (NEW)

Cavium ThunderX2 Server Processors (ARCH_THUNDER2) [N/y/?] (NEW)

Socionext UniPhier SoC Family (ARCH_UNIPHIER) [N/y/?] (NEW)

ARMv8 software model (Versatile Express) (ARCH_VEXPRESS) [N/y/?] (NEW)

AppliedMicro X-Gene SOC Family (ARCH_XGENE) [N/y/?] (NEW)

ZTE ZX SoC Family (ARCH_ZX) [N/y/?] (NEW)

Xilinx ZynqMP Family (ARCH_ZYNQMP) [N/y/?] (NEW)

*

* Bus support

*

PCI support (PCI) [N/y/?] (NEW)

*

* DesignWare PCI Core Support

*

*

* PCI Endpoint

*

PCI Endpoint Support (PCI_ENDPOINT) [N/y/?] (NEW)

*

* Kernel Features

*

*

* ARM errata workarounds via the alternatives framework

*

Cortex-A53: 826319: System might deadlock if a write cannot complete until read data is accepted (ARM64_ERRATUM_826319) [Y/n/?] (NEW)

Cortex-A53: 827319: Data cache clean instructions might cause overlapping transactions to the interconnect (ARM64_ERRATUM_827319) [Y/n/?] (NEW)

Cortex-A53: 824069: Cache line might not be marked as clean after a CleanShared snoop (ARM64_ERRATUM_824069) [Y/n/?] (NEW)

Cortex-A53: 819472: Store exclusive instructions might cause data corruption (ARM64_ERRATUM_819472) [Y/n/?] (NEW)

Cortex-A57: 832075: possible deadlock on mixing exclusive memory accesses with device loads (ARM64_ERRATUM_832075) [Y/n/?] (NEW)

Cortex-A53: 843419: A load or store might access an incorrect address (ARM64_ERRATUM_843419) [Y/n/?] (NEW)

Cavium erratum 22375, 24313 (CAVIUM_ERRATUM_22375) [Y/n/?] (NEW)

Cavium erratum 23154: Access to ICC_IAR1_EL1 is not sync'ed (CAVIUM_ERRATUM_23154) [Y/n/?] (NEW)

Cavium erratum 27456: Broadcast TLBI instructions may cause icache corruption (CAVIUM_ERRATUM_27456) [Y/n/?] (NEW)

Cavium erratum 30115: Guest may disable interrupts in host (CAVIUM_ERRATUM_30115) [Y/n/?] (NEW)

Falkor E1003: Incorrect translation due to ASID change (QCOM_FALKOR_ERRATUM_1003) [Y/n/?] (NEW)

Falkor E1009: Prematurely complete a DSB after a TLBI (QCOM_FALKOR_ERRATUM_1009) [Y/n/?] (NEW)

QDF2400 E0065: Incorrect GITS_TYPER.ITT_Entry_size (QCOM_QDF2400_ERRATUM_0065) [Y/n/?] (NEW)

Falkor E1041: Speculative instruction fetches might cause errant memory access (QCOM_FALKOR_ERRATUM_E1041) [Y/n/?] (NEW)

Page size

> 1. 4KB (ARM64_4K_PAGES) (NEW)

2. 16KB (ARM64_16K_PAGES) (NEW)

3. 64KB (ARM64_64K_PAGES) (NEW)

choice[1-3?]: Virtual address space size

> 1. 39-bit (ARM64_VA_BITS_39) (NEW)

2. 48-bit (ARM64_VA_BITS_48) (NEW)

choice[1-2?]: Build big-endian kernel (CPU_BIG_ENDIAN) [N/y/?] (NEW)

Multi-core scheduler support (SCHED_MC) [N/y/?] (NEW)

SMT scheduler support (SCHED_SMT) [N/y/?] (NEW)

Maximum number of CPUs (2-4096) (NR_CPUS) [64] (NEW)

Support for hot-pluggable CPUs (HOTPLUG_CPU) [Y/?] (NEW) y

Numa Memory Allocation and Scheduler Support (NUMA) [N/y/?] (NEW)

Preemption Model

> 1. No Forced Preemption (Server) (PREEMPT_NONE) (NEW)

2. Voluntary Kernel Preemption (Desktop) (PREEMPT_VOLUNTARY) (NEW)

3. Preemptible Kernel (Low-Latency Desktop) (PREEMPT) (NEW)

choice[1-3]: Timer frequency

1. 100 HZ (HZ_100) (NEW)

> 2. 250 HZ (HZ_250) (NEW)

3. 300 HZ (HZ_300) (NEW)

4. 1000 HZ (HZ_1000) (NEW)

choice[1-4?]: Memory model

> 1. Sparse Memory (SPARSEMEM_MANUAL) (NEW)

choice[1]: 1

Sparse Memory virtual memmap (SPARSEMEM_VMEMMAP) [Y/n/?] (NEW)

Allow for memory compaction (COMPACTION) [Y/n/?] (NEW)

Page migration (MIGRATION) [Y/?] (NEW) y

Enable bounce buffers (BOUNCE) [Y/n/?] (NEW)

Enable KSM for page merging (KSM) [N/y/?] (NEW)

Low address space to protect from user allocation (DEFAULT_MMAP_MIN_ADDR) [4096] (NEW)

Enable recovery from hardware memory errors (MEMORY_FAILURE) [N/y/?] (NEW)

Transparent Hugepage Support (TRANSPARENT_HUGEPAGE) [N/y/?] (NEW)

Enable cleancache driver to cache clean pages if tmem is present (CLEANCACHE) [N/y/?] (NEW)

Enable frontswap to cache swap pages if tmem is present (FRONTSWAP) [N/y/?] (NEW)

Contiguous Memory Allocator (CMA) [N/y/?] (NEW)

Common API for compressed memory storage (ZPOOL) [N/y/?] (NEW)

Low (Up to 2x) density storage for compressed pages (ZBUD) [N/y/?] (NEW)

Memory allocator for compressed pages (ZSMALLOC) [N/y/?] (NEW)

Enable idle page tracking (IDLE_PAGE_TRACKING) [N/y/?] (NEW)

Collect percpu memory statistics (PERCPU_STATS) [N/y/?] (NEW)

Enable seccomp to safely compute untrusted bytecode (SECCOMP) [N/y/?] (NEW)

Enable paravirtualization code (PARAVIRT) [N/y/?] (NEW)

Paravirtual steal time accounting (PARAVIRT_TIME_ACCOUNTING) [N/y/?] (NEW)

kexec system call (KEXEC) [N/y/?] (NEW)

Build kdump crash kernel (CRASH_DUMP) [N/y/?] (NEW)

Xen guest support on ARM64 (XEN) [N/y/?] (NEW)

Emulate Privileged Access Never using TTBR0_EL1 switching (ARM64_SW_TTBR0_PAN) [N/y/?] (NEW)

*

* ARMv8.1 architectural features

*

Support for hardware updates of the Access and Dirty page flags (ARM64_HW_AFDBM) [Y/n/?] (NEW)

Enable support for Privileged Access Never (PAN) (ARM64_PAN) [Y/n/?] (NEW)

Atomic instructions (ARM64_LSE_ATOMICS) [N/y/?] (NEW)

Enable support for Virtualization Host Extensions (VHE) (ARM64_VHE) [Y/n/?] (NEW)

*

* ARMv8.2 architectural features

*

Enable support for User Access Override (UAO) (ARM64_UAO) [Y/n/?] (NEW)

Enable support for persistent memory (ARM64_PMEM) [N/y/?] (NEW)

Randomize the address of the kernel image (RANDOMIZE_BASE) [N/y/?] (NEW)

*

* Boot options

*

Default kernel command string (CMDLINE) [] (NEW)

Always use the default kernel command string (CMDLINE_FORCE) [N/y/?] (NEW)

UEFI runtime support (EFI) [Y/n/?] (NEW)

Enable support for SMBIOS (DMI) tables (DMI) [Y/n/?] (NEW)

*

* Userspace binary formats

*

Kernel support for ELF binaries (BINFMT_ELF) [Y/n/?] (NEW)

Write ELF core dumps with partial segments (CORE_DUMP_DEFAULT_ELF_HEADERS) [Y/n/?] (NEW)

Kernel support for scripts starting with #! (BINFMT_SCRIPT) [Y/n/?] (NEW)

Kernel support for MISC binaries (BINFMT_MISC) [N/y/?] (NEW)

Kernel support for 32-bit EL0 (COMPAT) [N/y/?] (NEW)

*

* Power management options

*

Suspend to RAM and standby (SUSPEND) [Y/n/?] (NEW)

Hibernation (aka 'suspend to disk') (HIBERNATION) [N/y/?] (NEW)

Opportunistic sleep (PM_AUTOSLEEP) [N/y/?] (NEW)

User space wakeup sources interface (PM_WAKELOCKS) [N/y/?] (NEW)

Device power management core functionality (PM) [Y/?] (NEW) y

Power Management Debug Support (PM_DEBUG) [N/y/?] (NEW)

Enable workqueue power-efficient mode by default (WQ_POWER_EFFICIENT_DEFAULT) [N/y/?] (NEW)

*

* CPU Power Management

*

*

* CPU Idle

*

CPU idle PM support (CPU_IDLE) [N/y/?] (NEW)

*

* CPU Frequency scaling

*

CPU Frequency scaling (CPU_FREQ) [N/y/?] (NEW)

*

* Networking support

*

Networking support (NET) [N/y/?] (NEW)

*

* Device Drivers

*

*

* Generic Driver Options

*

Support for uevent helper (UEVENT_HELPER) [Y/n/?] (NEW)

path to uevent helper (UEVENT_HELPER_PATH) [] (NEW)

Maintain a devtmpfs filesystem to mount at /dev (DEVTMPFS) [N/y/?] (NEW)

Select only drivers that don't need compile-time external firmware (STANDALONE) [Y/n/?] (NEW)

Prevent firmware from being built (PREVENT_FIRMWARE_BUILD) [Y/n/?] (NEW)

Userspace firmware loading support (FW_LOADER) [Y/?] y

Include in-kernel firmware blobs in kernel binary (FIRMWARE_IN_KERNEL) [Y/n/?] (NEW)

External firmware blobs to build into the kernel binary (EXTRA_FIRMWARE) [] (NEW)

Fallback user-helper invocation for firmware loading (FW_LOADER_USER_HELPER_FALLBACK) [N/y/?] (NEW)

*

* Bus devices

*

Broadcom STB GISB bus arbiter (BRCMSTB_GISB_ARB) [N/y/?] (NEW)

Simple Power-Managed Bus Driver (SIMPLE_PM_BUS) [N/y/?] (NEW)

Versatile Express configuration bus (VEXPRESS_CONFIG) [N/y/?] (NEW)

*

* Memory Technology Device (MTD) support

*

Memory Technology Device (MTD) support (MTD) [N/y/?] (NEW)

*

* Device Tree and Open Firmware support

*

Device Tree and Open Firmware support (OF) [Y/?] (NEW) y

Device Tree runtime unit tests (OF_UNITTEST) [N/y/?] (NEW)

Device Tree overlays (OF_OVERLAY) [N/y/?] (NEW)

*

* Parallel port support

*

Parallel port support (PARPORT) [N/y/?] (NEW)

*

* Block devices

*

Block devices (BLK_DEV) [Y/n/?] (NEW)

Null test block driver (BLK_DEV_NULL_BLK) [N/y] (NEW)

Loopback device support (BLK_DEV_LOOP) [N/y/?] (NEW)

*

* DRBD disabled because PROC_FS or INET not selected

*

RAM block device support (BLK_DEV_RAM) [N/y/?] (NEW)

Packet writing on CD/DVD media (DEPRECATED) (CDROM_PKTCDVD) [N/y/?] (NEW)

NVM Express over Fabrics FC host driver (NVME_FC) [N/y/?] (NEW)

*

* Misc devices

*

Dummy IRQ handler (DUMMY_IRQ) [N/y/?] (NEW)

Enclosure Services (ENCLOSURE_SERVICES) [N/y/?] (NEW)

Generic on-chip SRAM driver (SRAM) [N/y/?] (NEW)

*

* Silicon Labs C2 port support

*

Silicon Labs C2 port support (C2PORT) [N/y/?] (NEW)

*

* EEPROM support

*

EEPROM 93CX6 support (EEPROM_93CX6) [N/y/?] (NEW)

*

* Texas Instruments shared transport line discipline

*

*

* Altera FPGA firmware download module

*

*

* Intel MIC Bus Driver

*

*

* SCIF Bus Driver

*

*

* VOP Bus Driver

*

*

* Intel MIC Host Driver

*

*

* Intel MIC Card Driver

*

*

* SCIF Driver

*

*

* Intel MIC Coprocessor State Management (COSM) Drivers

*

*

* VOP Driver

*

Line Echo Canceller support (ECHO) [N/y/?] (NEW)

*

* SCSI device support

*

RAID Transport Class (RAID_ATTRS) [N/y/?] (NEW)

SCSI device support (SCSI) [N/y/?] (NEW)

*

* Serial ATA and Parallel ATA drivers (libata)

*

Serial ATA and Parallel ATA drivers (libata) (ATA) [N/y/?] (NEW)

*

* Multiple devices driver support (RAID and LVM)

*

Multiple devices driver support (RAID and LVM) (MD) [N/y/?] (NEW)

*

* Open-Channel SSD target support

*

Open-Channel SSD target support (NVM) [N/y/?] (NEW)

*

* Input device support

*

Generic input layer (needed for keyboard, mouse, ...) (INPUT) [Y/?] y

Support for memoryless force-feedback devices (INPUT_FF_MEMLESS) [N/y/?] (NEW)

Polled input device skeleton (INPUT_POLLDEV) [N/y/?] (NEW)

Sparse keymap support library (INPUT_SPARSEKMAP) [N/y/?] (NEW)

Matrix keymap support library (INPUT_MATRIXKMAP) [N/y/?] (NEW)

*

* Userland interfaces

*

Mouse interface (INPUT_MOUSEDEV) [N/y/?] (NEW)

Joystick interface (INPUT_JOYDEV) [N/y/?] (NEW)

Event interface (INPUT_EVDEV) [N/y/?] (NEW)

Event debugging (INPUT_EVBUG) [N/y/?] (NEW)

*

* Input Device Drivers

*

*

* Keyboards

*

Keyboards (INPUT_KEYBOARD) [Y/n/?] (NEW)

AT keyboard (KEYBOARD_ATKBD) [Y/n/?] (NEW)

DECstation/VAXstation LK201/LK401 keyboard (KEYBOARD_LKKBD) [N/y/?] (NEW)

Newton keyboard (KEYBOARD_NEWTON) [N/y/?] (NEW)

OpenCores Keyboard Controller (KEYBOARD_OPENCORES) [N/y/?] (NEW)

Samsung keypad support (KEYBOARD_SAMSUNG) [N/y/?] (NEW)

Stowaway keyboard (KEYBOARD_STOWAWAY) [N/y/?] (NEW)

Sun Type 4 and Type 5 keyboard (KEYBOARD_SUNKBD) [N/y/?] (NEW)

TI OMAP4+ keypad support (KEYBOARD_OMAP4) [N/y/?] (NEW)

XT keyboard (KEYBOARD_XTKBD) [N/y/?] (NEW)

Broadcom keypad driver (KEYBOARD_BCM) [N/y/?] (NEW)

*

* Mice

*

Mice (INPUT_MOUSE) [Y/n/?] (NEW)

PS/2 mouse (MOUSE_PS2) [Y/n/?] (NEW)

Elantech PS/2 protocol extension (MOUSE_PS2_ELANTECH) [N/y/?] (NEW)

Sentelic Finger Sensing Pad PS/2 protocol extension (MOUSE_PS2_SENTELIC) [N/y/?] (NEW)

eGalax TouchKit PS/2 protocol extension (MOUSE_PS2_TOUCHKIT) [N/y/?] (NEW)

Serial mouse (MOUSE_SERIAL) [N/y/?] (NEW)

Apple USB Touchpad support (MOUSE_APPLETOUCH) [N/y/?] (NEW)

Apple USB BCM5974 Multitouch trackpad support (MOUSE_BCM5974) [N/y/?] (NEW)

DEC VSXXX-AA/GA mouse and VSXXX-AB tablet (MOUSE_VSXXXAA) [N/y/?] (NEW)

Synaptics USB device support (MOUSE_SYNAPTICS_USB) [N/y/?] (NEW)

*

* Joysticks/Gamepads

*

Joysticks/Gamepads (INPUT_JOYSTICK) [N/y/?] (NEW)

*

* Tablets

*

Tablets (INPUT_TABLET) [N/y/?] (NEW)

*

* Touchscreens

*

Touchscreens (INPUT_TOUCHSCREEN) [N/y/?] (NEW)

*

* Miscellaneous devices

*

Miscellaneous devices (INPUT_MISC) [N/y/?] (NEW)

Synaptics RMI4 bus support (RMI4_CORE) [N/y/?] (NEW)

*

* Hardware I/O ports

*

Serial I/O support (SERIO) [Y/?] (NEW) y

Serial port line discipline (SERIO_SERPORT) [Y/n/?] (NEW)

AMBA KMI keyboard controller (SERIO_AMBAKMI) [N/y] (NEW)

PS/2 driver library (SERIO_LIBPS2) [Y/?] (NEW) y

Raw access to serio ports (SERIO_RAW) [N/y/?] (NEW)

Altera UP PS/2 controller (SERIO_ALTERA_PS2) [N/y/?] (NEW)

TQC PS/2 multiplexer (SERIO_PS2MULT) [N/y/?] (NEW)

ARC PS/2 support (SERIO_ARC_PS2) [N/y/?] (NEW)

GRLIB APBPS2 PS/2 keyboard/mouse controller (SERIO_APBPS2) [N/y/?] (NEW)

User space serio port driver support (USERIO) [N/y/?] (NEW)

Gameport support (GAMEPORT) [N/y/?] (NEW)

*

* Character devices

*

Enable TTY (TTY) [Y/?] y

Virtual terminal (VT) [Y/?] y

Support for binding and unbinding console drivers (VT_HW_CONSOLE_BINDING) [N/y/?] (NEW)

Legacy (BSD) PTY support (LEGACY_PTYS) [Y/n/?] (NEW)

Maximum number of legacy PTY in use (LEGACY_PTY_COUNT) [256] (NEW)

Non-standard serial port support (SERIAL_NONSTANDARD) [N/y/?] (NEW)

Trace data sink for MIPI P1149.7 cJTAG standard (TRACE_SINK) [N/y/?] (NEW)

/dev/mem virtual device support (DEVMEM) [Y/n/?] (NEW)

*

* Serial drivers

*

8250/16550 and compatible serial support (SERIAL_8250) [N/y/?] (NEW)

*

* Non-8250 serial port support

*

ARM AMBA PL010 serial port support (SERIAL_AMBA_PL010) [N/y/?] (NEW)

ARM AMBA PL011 serial port support (SERIAL_AMBA_PL011) [N/y/?] (NEW)

Early console using ARM semihosting (SERIAL_EARLYCON_ARM_SEMIHOST) [N/y/?] (NEW)

Xilinx uartlite serial port support (SERIAL_UARTLITE) [N/y/?] (NEW)

SCCNXP serial port support (SERIAL_SCCNXP) [N/y/?] (NEW)

Altera JTAG UART support (SERIAL_ALTERA_JTAGUART) [N/y/?] (NEW)

Altera UART support (SERIAL_ALTERA_UART) [N/y/?] (NEW)

Cadence (Xilinx Zynq) UART support (SERIAL_XILINX_PS_UART) [N/y/?] (NEW)

ARC UART driver support (SERIAL_ARC) [N/y/?] (NEW)

Freescale lpuart serial port support (SERIAL_FSL_LPUART) [N/y/?] (NEW)

Conexant Digicolor CX92xxx USART serial port support (SERIAL_CONEXANT_DIGICOLOR) [N/y/?] (NEW)

*

* Serial device bus

*

Serial device bus (SERIAL_DEV_BUS) [N/y/?] (NEW)

ARM JTAG DCC console (HVC_DCC) [N/y/?] (NEW)

*

* IPMI top-level message handler

*

IPMI top-level message handler (IPMI_HANDLER) [N/y/?] (NEW)

*

* Hardware Random Number Generator Core support

*

Hardware Random Number Generator Core support (HW_RANDOM) [Y/n/?] (NEW)

Timer IOMEM HW Random Number Generator support (HW_RANDOM_TIMERIOMEM) [N/y/?] (NEW)

Siemens R3964 line discipline (R3964) [N/y/?] (NEW)

*

* PCMCIA character devices

*

RAW driver (/dev/raw/rawN) (RAW_DRIVER) [N/y/?] (NEW)

*

* TPM Hardware Support

*

TPM Hardware Support (TCG_TPM) [N/y/?] (NEW)

Xillybus generic FPGA interface (XILLYBUS) [N/y/?] (NEW)

*

* I2C support

*

I2C support (I2C) [N/y/?] (NEW)

*

* SPI support

*

SPI support (SPI) [N/y/?] (NEW)

*

* SPMI support

*

SPMI support (SPMI) [N/y/?] (NEW)

*

* HSI support

*

HSI support (HSI) [N/y/?] (NEW)

*

* PPS support

*

PPS support (PPS) [N/y/?] (NEW)

*

* PTP clock support

*

*

* Enable PHYLIB and NETWORK_PHY_TIMESTAMPING to see the additional clocks.

*

*

* GPIO Support

*

GPIO Support (GPIOLIB) [N/y/?] (NEW)

*

* Dallas's 1-wire support

*

Dallas's 1-wire support (W1) [N/y/?] (NEW)

*

* Adaptive Voltage Scaling class support

*

Adaptive Voltage Scaling class support (POWER_AVS) [N/y/?] (NEW)

*

* Board level reset or power off

*

Board level reset or power off (POWER_RESET) [Y/?] (NEW) y

Restart power-off driver (POWER_RESET_RESTART) [N/y/?] (NEW)

APM SoC X-Gene reset driver (POWER_RESET_XGENE) [N/y/?] (NEW)

Generic SYSCON regmap reset driver (POWER_RESET_SYSCON) [N/y/?] (NEW)

Generic SYSCON regmap poweroff driver (POWER_RESET_SYSCON_POWEROFF) [N/y/?] (NEW)

*

* Power supply class support

*

Power supply class support (POWER_SUPPLY) [Y/?] (NEW) y

Power supply debug (POWER_SUPPLY_DEBUG) [N/y/?] (NEW)

Generic PDA/phone power driver (PDA_POWER) [N/y/?] (NEW)

Test power driver (TEST_POWER) [N/y/?] (NEW)

DS2780 battery driver (BATTERY_DS2780) [N/y/?] (NEW)

DS2781 battery driver (BATTERY_DS2781) [N/y/?] (NEW)

BQ27xxx battery driver (BATTERY_BQ27XXX) [N/y/?] (NEW)

MAX8903 Battery DC-DC Charger for USB and Adapter Power (CHARGER_MAX8903) [N/y/?] (NEW)

*

* Hardware Monitoring support

*

Hardware Monitoring support (HWMON) [Y/n/?] (NEW)

Hardware Monitoring Chip debugging messages (HWMON_DEBUG_CHIP) [N/y/?] (NEW)

*

* Native drivers

*

ASPEED AST2400/AST2500 PWM and Fan tach driver (SENSORS_ASPEED) [N/y/?] (NEW)

Fintek F71805F/FG, F71806F/FG and F71872F/FG (SENSORS_F71805F) [N/y/?] (NEW)

Fintek F71882FG and compatibles (SENSORS_F71882FG) [N/y/?] (NEW)

ITE IT87xx and compatibles (SENSORS_IT87) [N/y/?] (NEW)

Maxim MAX197 and compatibles (SENSORS_MAX197) [N/y/?] (NEW)

National Semiconductor PC87360 family (SENSORS_PC87360) [N/y/?] (NEW)

National Semiconductor PC87427 (SENSORS_PC87427) [N/y/?] (NEW)

NTC thermistor support from Murata (SENSORS_NTC_THERMISTOR) [N/y/?] (NEW)

Nuvoton NCT6683D (SENSORS_NCT6683) [N/y/?] (NEW)

Nuvoton NCT6775F and compatibles (SENSORS_NCT6775) [N/y/?] (NEW)

SMSC LPC47M10x and compatibles (SENSORS_SMSC47M1) [N/y/?] (NEW)

SMSC LPC47B397-NC (SENSORS_SMSC47B397) [N/y/?] (NEW)

VIA VT1211 (SENSORS_VT1211) [N/y/?] (NEW)

Winbond W83627HF, W83627THF, W83637HF, W83687THF, W83697HF (SENSORS_W83627HF) [N/y/?] (NEW)

Winbond W83627EHF/EHG/DHG/UHG, W83667HG, NCT6775F, NCT6776F (SENSORS_W83627EHF) [N/y/?] (NEW)

*

* Generic Thermal sysfs driver

*

Generic Thermal sysfs driver (THERMAL) [N/y/?] (NEW)

*

* Watchdog Timer Support

*

Watchdog Timer Support (WATCHDOG) [N/y/?] (NEW)

*

* Sonics Silicon Backplane

*

Sonics Silicon Backplane support (SSB) [N/y/?] (NEW)

*

* Broadcom specific AMBA

*

Broadcom specific AMBA (BCMA) [N/y/?] (NEW)

*

* Multifunction device drivers

*

Atmel Flexcom (Flexible Serial Communication Unit) (MFD_ATMEL_FLEXCOM) [N/y/?] (NEW)

Atmel HLCDC (High-end LCD Controller) (MFD_ATMEL_HLCDC) [N/y/?] (NEW)

ChromeOS Embedded Controller (MFD_CROS_EC) [N/y/?] (NEW)

HiSilicon Hi6421 PMU/Codec IC (MFD_HI6421_PMIC) [N/y/?] (NEW)

HTC PASIC3 LED/DS1WM chip support (HTC_PASIC3) [N/y/?] (NEW)

Kontron module PLD device (MFD_KEMPLD) [N/y/?] (NEW)

MediaTek MT6397 PMIC Support (MFD_MT6397) [N/y/?] (NEW)

Silicon Motion SM501 (MFD_SM501) [N/y/?] (NEW)

ST-Ericsson ABX500 Mixed Signal Circuit register functions (ABX500_CORE) [N/y/?] (NEW)

System Controller Register R/W Based on Regmap (MFD_SYSCON) [N/y/?] (NEW)

TI ADC / Touch Screen chip support (MFD_TI_AM335X_TSCADC) [N/y/?] (NEW)

*

* Voltage and Current Regulator Support

*

Voltage and Current Regulator Support (REGULATOR) [N/y/?] (NEW)

*

* Remote Controller support

*

Remote Controller support (RC_CORE) [Y/n/?] (NEW)

Compile Remote Controller keymap modules (RC_MAP) [Y/n/?] (NEW)

*

* Remote controller decoders

*

Remote controller decoders (RC_DECODERS) [Y/n] (NEW)

LIRC interface driver (LIRC) [N/y/?] (NEW)

Enable IR raw decoder for the NEC protocol (IR_NEC_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the RC-5 protocol (IR_RC5_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the RC6 protocol (IR_RC6_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the JVC protocol (IR_JVC_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the Sony protocol (IR_SONY_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the Sanyo protocol (IR_SANYO_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the Sharp protocol (IR_SHARP_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the MCE keyboard/mouse protocol (IR_MCE_KBD_DECODER) [Y/n/?] (NEW)

Enable IR raw decoder for the XMP protocol (IR_XMP_DECODER) [Y/n/?] (NEW)

*

* Remote Controller devices

*

Remote Controller devices (RC_DEVICES) [N/y] (NEW)

*

* Multimedia support

*

Multimedia support (MEDIA_SUPPORT) [N/y/?] (NEW)

*

* Graphics support

*

*

* Direct Rendering Manager (XFree86 4.1.0 and higher DRI support)

*

Direct Rendering Manager (XFree86 4.1.0 and higher DRI support) (DRM) [N/y/?] (NEW)

*

* ACP (Audio CoProcessor) Configuration

*

*

* Frame buffer Devices

*

*

* Support for frame buffer devices

*

Support for frame buffer devices (FB) [N/y/?] (NEW)

*

* Backlight & LCD device support

*

Backlight & LCD device support (BACKLIGHT_LCD_SUPPORT) [N/y/?] (NEW)

*

* Console display driver support

*

Initial number of console screen columns (DUMMY_CONSOLE_COLUMNS) [80] (NEW)

Initial number of console screen rows (DUMMY_CONSOLE_ROWS) [25] (NEW)

*

* Sound card support

*

Sound card support (SOUND) [N/y/?] (NEW)

*

* HID support

*

HID bus support (HID) [Y/n/?] (NEW)

Battery level reporting for HID devices (HID_BATTERY_STRENGTH) [N/y/?] (NEW)

/dev/hidraw raw HID device support (HIDRAW) [N/y/?] (NEW)

User-space I/O driver support for HID subsystem (UHID) [N/y/?] (NEW)

Generic HID driver (HID_GENERIC) [Y/n/?] (NEW)

*

* Special HID drivers

*

A4 tech mice (HID_A4TECH) [Y/n/?] (NEW)

ACRUX game controller support (HID_ACRUX) [N/y/?] (NEW)

Apple {i,Power,Mac}Books (HID_APPLE) [Y/n/?] (NEW)

Aureal (HID_AUREAL) [N/y/?] (NEW)

Belkin Flip KVM and Wireless keyboard (HID_BELKIN) [Y/n/?] (NEW)

Cherry Cymotion keyboard (HID_CHERRY) [Y/n/?] (NEW)

Chicony devices (HID_CHICONY) [Y/n/?] (NEW)

CMedia CM6533 HID audio jack controls (HID_CMEDIA) [N/y/?] (NEW)

Cypress mouse and barcode readers (HID_CYPRESS) [Y/n/?] (NEW)

DragonRise Inc. game controller (HID_DRAGONRISE) [N/y/?] (NEW)

EMS Production Inc. force feedback support (HID_EMS_FF) [N/y/?] (NEW)

ELECOM HID devices (HID_ELECOM) [N/y/?] (NEW)

Ezkey BTC 8193 keyboard (HID_EZKEY) [Y/n/?] (NEW)

Gembird Joypad (HID_GEMBIRD) [N/y/?] (NEW)

Google Fiber TV Box remote control support (HID_GFRM) [N/y/?] (NEW)

Keytouch HID devices (HID_KEYTOUCH) [N/y/?] (NEW)

KYE/Genius devices (HID_KYE) [N/y/?] (NEW)

Waltop (HID_WALTOP) [N/y/?] (NEW)

Gyration remote control (HID_GYRATION) [N/y/?] (NEW)

ION iCade arcade controller (HID_ICADE) [N/y/?] (NEW)

ITE devices (HID_ITE) [Y/n/?] (NEW)

Twinhan IR remote control (HID_TWINHAN) [N/y/?] (NEW)

Kensington Slimblade Trackball (HID_KENSINGTON) [Y/n/?] (NEW)

LC-Power (HID_LCPOWER) [N/y/?] (NEW)

Lenovo / Thinkpad devices (HID_LENOVO) [N/y/?] (NEW)

Logitech devices (HID_LOGITECH) [Y/n/?] (NEW)

Logitech HID++ devices support (HID_LOGITECH_HIDPP) [N/y/?] (NEW)

Logitech force feedback support (LOGITECH_FF) [N/y/?] (NEW)

Logitech force feedback support (variant 2) (LOGIRUMBLEPAD2_FF) [N/y/?] (NEW)

Logitech Flight System G940 force feedback support (LOGIG940_FF) [N/y/?] (NEW)

Logitech wheels configuration and force feedback support (LOGIWHEELS_FF) [N/y/?] (NEW)

Apple Magic Mouse/Trackpad multi-touch support (HID_MAGICMOUSE) [N/y/?] (NEW)

Mayflash game controller adapter force feedback (HID_MAYFLASH) [N/y/?] (NEW)

Microsoft non-fully HID-compliant devices (HID_MICROSOFT) [Y/n/?] (NEW)

Monterey Genius KB29E keyboard (HID_MONTEREY) [Y/n/?] (NEW)

HID Multitouch panels (HID_MULTITOUCH) [N/y/?] (NEW)

NTI keyboard adapters (HID_NTI) [N/y/?] (NEW)

Ortek PKB-1700/WKB-2000/Skycable wireless keyboard and mouse trackpad (HID_ORTEK) [N/y/?] (NEW)

Pantherlord/GreenAsia game controller (HID_PANTHERLORD) [N/y/?] (NEW)

Petalynx Maxter remote control (HID_PETALYNX) [N/y/?] (NEW)

PicoLCD (graphic version) (HID_PICOLCD) [N/y/?] (NEW)

Plantronics USB HID Driver (HID_PLANTRONICS) [N/y/?] (NEW)

Primax non-fully HID-compliant devices (HID_PRIMAX) [N/y/?] (NEW)

Saitek (Mad Catz) non-fully HID-compliant devices (HID_SAITEK) [N/y/?] (NEW)

Samsung InfraRed remote control or keyboards (HID_SAMSUNG) [N/y/?] (NEW)

Speedlink VAD Cezanne mouse support (HID_SPEEDLINK) [N/y/?] (NEW)

Steelseries SRW-S1 steering wheel support (HID_STEELSERIES) [N/y/?] (NEW)

Sunplus wireless desktop (HID_SUNPLUS) [N/y/?] (NEW)

Synaptics RMI4 device support (HID_RMI) [N/y/?] (NEW)

GreenAsia (Product ID 0x12) game controller support (HID_GREENASIA) [N/y/?] (NEW)

SmartJoy PLUS PS2/USB adapter support (HID_SMARTJOYPLUS) [N/y/?] (NEW)

TiVo Slide Bluetooth remote control support (HID_TIVO) [N/y/?] (NEW)

TopSeed Cyberlink, BTC Emprex, Conceptronic remote control support (HID_TOPSEED) [N/y/?] (NEW)

ThrustMaster devices support (HID_THRUSTMASTER) [N/y/?] (NEW)

THQ PS3 uDraw tablet (HID_UDRAW_PS3) [N/y/?] (NEW)

Xin-Mo non-fully compliant devices (HID_XINMO) [N/y/?] (NEW)

Zeroplus based game controller support (HID_ZEROPLUS) [N/y/?] (NEW)

Zydacron remote control support (HID_ZYDACRON) [N/y/?] (NEW)

HID Sensors framework support (HID_SENSOR_HUB) [N/y/?] (NEW)

Alps HID device support (HID_ALPS) [N/y/?] (NEW)

*

* USB support

*

USB support (USB_SUPPORT) [Y/n/?] (NEW)

Support for Host-side USB (USB) [N/y/?] (NEW)

*

* USB port drivers

*

*

* USB Physical Layer drivers

*

NOP USB Transceiver Driver (NOP_USB_XCEIV) [N/y/?] (NEW)

Generic ULPI Transceiver Driver (USB_ULPI) [N/y/?] (NEW)

*

* USB Gadget Support

*

USB Gadget Support (USB_GADGET) [N/y/?] (NEW)

*

* USB Power Delivery and Type-C drivers

*

USB Type-C Connector System Software Interface driver (TYPEC_UCSI) [N/y/?] (NEW)

USB ULPI PHY interface support (USB_ULPI_BUS) [N/y/?] (NEW)

*

* Ultra Wideband devices

*

Ultra Wideband devices (UWB) [N/y/?] (NEW)

*

* MMC/SD/SDIO card support

*

MMC/SD/SDIO card support (MMC) [N/y/?] (NEW)

*

* Sony MemoryStick card support

*

Sony MemoryStick card support (MEMSTICK) [N/y/?] (NEW)

*

* LED Support

*

LED Support (NEW_LEDS) [N/y/?] (NEW)

*

* Accessibility support

*

Accessibility support (ACCESSIBILITY) [N/y/?] (NEW)

*

* Real Time Clock

*

Real Time Clock (RTC_CLASS) [N/y/?] (NEW)

*

* DMA Engine support

*

DMA Engine support (DMADEVICES) [N/y/?] (NEW)

*

* DMABUF options

*

Explicit Synchronization Framework (SYNC_FILE) [N/y/?] (NEW)

*

* Auxiliary Display support

*

Auxiliary Display support (AUXDISPLAY) [N/y/?] (NEW)

*

* Userspace I/O drivers

*

Userspace I/O drivers (UIO) [N/y/?] (NEW)

*

* VFIO Non-Privileged userspace driver framework

*

VFIO Non-Privileged userspace driver framework (VFIO) [N/y/?] (NEW)

*

* Virtualization drivers

*

Virtualization drivers (VIRT_DRIVERS) [N/y/?] (NEW)

*

* Virtio drivers

*

Platform bus driver for memory mapped virtio devices (VIRTIO_MMIO) [N/y/?] (NEW)

*

* Microsoft Hyper-V guest support

*

*

* Staging drivers

*

Staging drivers (STAGING) [N/y/?] (NEW)

*

* Platform support for Goldfish virtual devices

*

Platform support for Goldfish virtual devices (GOLDFISH) [N/y/?] (NEW)

*

* Platform support for Chrome hardware

*

Platform support for Chrome hardware (CHROME_PLATFORMS) [N/y/?] (NEW)

*

* Common Clock Framework

*

Clock driver for ARM Reference designs (COMMON_CLK_VERSATILE) [N/y/?] (NEW)

PLL Driver for HSDK platform (CLK_HSDK) [N/y/?] (NEW)

Clock driver for Freescale QorIQ platforms (CLK_QORIQ) [N/y/?] (NEW)

Clock driver for APM XGene SoC (COMMON_CLK_XGENE) [Y/n/?] (NEW)

*

* Hardware Spinlock drivers

*

Hardware Spinlock drivers (HWSPINLOCK) [N/y] (NEW)

*

* Clock Source drivers

*

Enable ARM architected timer event stream generation by default (ARM_ARCH_TIMER_EVTSTREAM) [Y/n/?] (NEW)

Workaround for Freescale/NXP Erratum A-008585 (FSL_ERRATUM_A008585) [Y/n/?] (NEW)

Workaround for Hisilicon Erratum 161010101 (HISILICON_ERRATUM_161010101) [Y/n/?] (NEW)

Workaround for Cortex-A73 erratum 858921 (ARM64_ERRATUM_858921) [Y/n/?] (NEW)

Support for Dual Timer SP804 module (ARM_TIMER_SP804) [N/y] (NEW)

*

* Mailbox Hardware Support

*

Mailbox Hardware Support (MAILBOX) [N/y/?] (NEW)

*

* IOMMU Hardware Support

*

IOMMU Hardware Support (IOMMU_SUPPORT) [Y/n/?] (NEW)

*

* Generic IOMMU Pagetable Support

*

ARMv7/v8 Long Descriptor Format (IOMMU_IO_PGTABLE_LPAE) [N/y/?] (NEW)

ARMv7/v8 Short Descriptor Format (IOMMU_IO_PGTABLE_ARMV7S) [N/y/?] (NEW)

ARM Ltd. System MMU (SMMU) Support (ARM_SMMU) [N/y/?] (NEW)

ARM Ltd. System MMU Version 3 (SMMUv3) Support (ARM_SMMU_V3) [N/y/?] (NEW)

*

* Remoteproc drivers

*

Support for Remote Processor subsystem (REMOTEPROC) [N/y/?] (NEW)

*

* Rpmsg drivers

*

*

* SOC (System On Chip) specific Drivers

*

*

* Amlogic SoC drivers

*

*

* Broadcom SoC drivers

*

Broadcom STB SoC drivers (SOC_BRCMSTB) [N/y/?] (NEW)

*

* i.MX SoC drivers

*

*

* Qualcomm SoC drivers

*

*

* TI SOC drivers support

*

TI SOC drivers support (SOC_TI) [N/y] (NEW)

*

* Generic Dynamic Voltage and Frequency Scaling (DVFS) support

*

Generic Dynamic Voltage and Frequency Scaling (DVFS) support (PM_DEVFREQ) [N/y/?] (NEW)

*

* External Connector Class (extcon) support

*

External Connector Class (extcon) support (EXTCON) [N/y/?] (NEW)

*

* Memory Controller drivers

*

Memory Controller drivers (MEMORY) [N/y] (NEW)

*

* Industrial I/O support

*

Industrial I/O support (IIO) [N/y/?] (NEW)

*

* Pulse-Width Modulation (PWM) Support

*

Pulse-Width Modulation (PWM) Support (PWM) [N/y/?] (NEW)

*

* IndustryPack bus support

*

IndustryPack bus support (IPACK_BUS) [N/y/?] (NEW)

*

* Reset Controller Support

*

Reset Controller Support (RESET_CONTROLLER) [N/y/?] (NEW)

*

* FMC support

*

FMC support (FMC) [N/y/?] (NEW)

*

* PHY Subsystem

*

PHY Core (GENERIC_PHY) [N/y/?] (NEW)

APM X-Gene 15Gbps PHY support (PHY_XGENE) [N/y/?] (NEW)

Broadcom Kona USB2 PHY Driver (BCM_KONA_USB2_PHY) [N/y/?] (NEW)

Marvell USB HSIC 28nm PHY Driver (PHY_PXA_28NM_HSIC) [N/y/?] (NEW)

Marvell USB 2.0 28nm PHY Driver (PHY_PXA_28NM_USB2) [N/y/?] (NEW)

*

* Generic powercap sysfs driver

*

Generic powercap sysfs driver (POWERCAP) [N/y/?] (NEW)

*

* MCB support

*

MCB support (MCB) [N/y/?] (NEW)

*

* Reliability, Availability and Serviceability (RAS) features

*

Reliability, Availability and Serviceability (RAS) features (RAS) [N/y/?] (NEW)

*

* Android

*

Android Drivers (ANDROID) [N/y/?] (NEW)

*

* NVDIMM (Non-Volatile Memory Device) Support

*

NVDIMM (Non-Volatile Memory Device) Support (LIBNVDIMM) [N/y/?] (NEW)

*

* DAX: direct access to differentiated memory

*

DAX: direct access to differentiated memory (DAX) [N/y] (NEW)

*

* NVMEM Support

*

NVMEM Support (NVMEM) [N/y/?] (NEW)

System Trace Module devices (STM) [N/y/?] (NEW)

Intel(R) Trace Hub controller (INTEL_TH) [N/y/?] (NEW)

*

* FPGA Configuration Framework

*

FPGA Configuration Framework (FPGA) [N/y/?] (NEW)

*

* FSI support

*

FSI support (FSI) [N/y/?] (NEW)

Trusted Execution Environment support (TEE) [N/y/?] (NEW)

*

* Firmware Drivers

*

ARM PSCI checker (ARM_PSCI_CHECKER) [N/y/?] (NEW)

Export DMI identification via sysfs to userspace (DMIID) [Y/n/?] (NEW)

DMI table support in sysfs (DMI_SYSFS) [N/y/?] (NEW)

*

* Google Firmware Drivers

*

Google Firmware Drivers (GOOGLE_FIRMWARE) [N/y/?] (NEW)

*

* EFI (Extensible Firmware Interface) Support

*

EFI Variable Support via sysfs (EFI_VARS) [N/y/?] (NEW)

EFI capsule loader (EFI_CAPSULE_LOADER) [N/y/?] (NEW)

EFI Runtime Service Tests Support (EFI_TEST) [N/y/?] (NEW)

Reset memory attack mitigation (RESET_ATTACK_MITIGATION) [N/y/?] (NEW)

*

* Tegra firmware driver

*

*

* File systems

*

Second extended fs support (EXT2_FS) [N/y/?] (NEW)

The Extended 3 (ext3) filesystem (EXT3_FS) [N/y/?] (NEW)

The Extended 4 (ext4) filesystem (EXT4_FS) [N/y/?] (NEW)

Reiserfs support (REISERFS_FS) [N/y/?] (NEW)

JFS filesystem support (JFS_FS) [N/y/?] (NEW)

XFS filesystem support (XFS_FS) [N/y/?] (NEW)

GFS2 file system support (GFS2_FS) [N/y/?] (NEW)

Btrfs filesystem support (BTRFS_FS) [N/y/?] (NEW)

NILFS2 file system support (NILFS2_FS) [N/y/?] (NEW)

F2FS filesystem support (F2FS_FS) [N/y/?] (NEW)

Direct Access (DAX) support (FS_DAX) [N/y/?] (NEW)

Enable filesystem export operations for block IO (EXPORTFS_BLOCK_OPS) [N/y/?] (NEW)

Enable POSIX file locking API (FILE_LOCKING) [Y/?] y

Enable Mandatory file locking (MANDATORY_FILE_LOCKING) [Y/n/?] (NEW)

FS Encryption (Per-file encryption) (FS_ENCRYPTION) [N/y/?] (NEW)

Dnotify support (DNOTIFY) [Y/n/?] (NEW)

Inotify support for userspace (INOTIFY_USER) [Y/n/?] (NEW)

Filesystem wide access notification (FANOTIFY) [N/y/?] (NEW)

Quota support (QUOTA) [N/y/?] (NEW)

Kernel automounter version 4 support (also supports v3) (AUTOFS4_FS) [N/y/?] (NEW)

FUSE (Filesystem in Userspace) support (FUSE_FS) [N/y/?] (NEW)

Overlay filesystem support (OVERLAY_FS) [N/y/?] (NEW)

*

* Caches

*

General filesystem local caching manager (FSCACHE) [N/y/?] (NEW)

*

* CD-ROM/DVD Filesystems

*

ISO 9660 CDROM file system support (ISO9660_FS) [N/y/?] (NEW)

UDF file system support (UDF_FS) [N/y/?] (NEW)

*

* DOS/FAT/NT Filesystems

*

MSDOS fs support (MSDOS_FS) [N/y/?] (NEW)

VFAT (Windows-95) fs support (VFAT_FS) [N/y/?] (NEW)

NTFS file system support (NTFS_FS) [N/y/?] (NEW)

*

* Pseudo filesystems

*

/proc file system support (PROC_FS) [Y/?] y

/proc/kcore support (PROC_KCORE) [N/y/?] (NEW)

Include /proc/<pid>/task/<tid>/children file (PROC_CHILDREN) [N/y/?] (NEW)

Tmpfs virtual memory file system support (former shm fs) (TMPFS) [N/y/?] (NEW)

HugeTLB file system support (HUGETLBFS) [N/y/?] (NEW)

Userspace-driven configuration filesystem (CONFIGFS_FS) [N/y/?] (NEW)

EFI Variable filesystem (EFIVAR_FS) [Y/n/?] (NEW)

*

* Miscellaneous filesystems

*

Miscellaneous filesystems (MISC_FILESYSTEMS) [Y/n/?] (NEW)

ORANGEFS (Powered by PVFS) support (ORANGEFS_FS) [N/y/?] (NEW)

ADFS file system support (ADFS_FS) [N/y/?] (NEW)

Amiga FFS file system support (AFFS_FS) [N/y/?] (NEW)

Apple Macintosh file system support (HFS_FS) [N/y/?] (NEW)

Apple Extended HFS file system support (HFSPLUS_FS) [N/y/?] (NEW)

BeOS file system (BeFS) support (read only) (BEFS_FS) [N/y/?] (NEW)

BFS file system support (BFS_FS) [N/y/?] (NEW)

EFS file system support (read only) (EFS_FS) [N/y/?] (NEW)

Compressed ROM file system support (cramfs) (OBSOLETE) (CRAMFS) [N/y/?] (NEW)

SquashFS 4.0 - Squashed file system support (SQUASHFS) [N/y/?] (NEW)

FreeVxFS file system support (VERITAS VxFS(TM) compatible) (VXFS_FS) [N/y/?] (NEW)

Minix file system support (MINIX_FS) [N/y/?] (NEW)

SonicBlue Optimized MPEG File System support (OMFS_FS) [N/y/?] (NEW)

OS/2 HPFS file system support (HPFS_FS) [N/y/?] (NEW)

QNX4 file system support (read only) (QNX4FS_FS) [N/y/?] (NEW)

QNX6 file system support (read only) (QNX6FS_FS) [N/y/?] (NEW)

ROM file system support (ROMFS_FS) [N/y/?] (NEW)

Persistent store support (PSTORE) [N/y/?] (NEW)

System V/Xenix/V7/Coherent file system support (SYSV_FS) [N/y/?] (NEW)

UFS file system support (read only) (UFS_FS) [N/y/?] (NEW)

*

* Native language support

*

Native language support (NLS) [Y/?] (NEW) y

Default NLS Option (NLS_DEFAULT) [iso8859-1] (NEW)

Codepage 437 (United States, Canada) (NLS_CODEPAGE_437) [N/y/?] (NEW)

Codepage 737 (Greek) (NLS_CODEPAGE_737) [N/y/?] (NEW)

Codepage 775 (Baltic Rim) (NLS_CODEPAGE_775) [N/y/?] (NEW)

Codepage 850 (Europe) (NLS_CODEPAGE_850) [N/y/?] (NEW)

Codepage 852 (Central/Eastern Europe) (NLS_CODEPAGE_852) [N/y/?] (NEW)

Codepage 855 (Cyrillic) (NLS_CODEPAGE_855) [N/y/?] (NEW)

Codepage 857 (Turkish) (NLS_CODEPAGE_857) [N/y/?] (NEW)

Codepage 860 (Portuguese) (NLS_CODEPAGE_860) [N/y/?] (NEW)

Codepage 861 (Icelandic) (NLS_CODEPAGE_861) [N/y/?] (NEW)

Codepage 862 (Hebrew) (NLS_CODEPAGE_862) [N/y/?] (NEW)

Codepage 863 (Canadian French) (NLS_CODEPAGE_863) [N/y/?] (NEW)

Codepage 864 (Arabic) (NLS_CODEPAGE_864) [N/y/?] (NEW)

Codepage 865 (Norwegian, Danish) (NLS_CODEPAGE_865) [N/y/?] (NEW)

Codepage 866 (Cyrillic/Russian) (NLS_CODEPAGE_866) [N/y/?] (NEW)

Codepage 869 (Greek) (NLS_CODEPAGE_869) [N/y/?] (NEW)

Simplified Chinese charset (CP936, GB2312) (NLS_CODEPAGE_936) [N/y/?] (NEW)

Traditional Chinese charset (Big5) (NLS_CODEPAGE_950) [N/y/?] (NEW)

Japanese charsets (Shift-JIS, EUC-JP) (NLS_CODEPAGE_932) [N/y/?] (NEW)

Korean charset (CP949, EUC-KR) (NLS_CODEPAGE_949) [N/y/?] (NEW)

Thai charset (CP874, TIS-620) (NLS_CODEPAGE_874) [N/y/?] (NEW)

Hebrew charsets (ISO-8859-8, CP1255) (NLS_ISO8859_8) [N/y/?] (NEW)

Windows CP1250 (Slavic/Central European Languages) (NLS_CODEPAGE_1250) [N/y/?] (NEW)

Windows CP1251 (Bulgarian, Belarusian) (NLS_CODEPAGE_1251) [N/y/?] (NEW)

ASCII (United States) (NLS_ASCII) [N/y/?] (NEW)

NLS ISO 8859-1 (Latin 1; Western European Languages) (NLS_ISO8859_1) [N/y/?] (NEW)

NLS ISO 8859-2 (Latin 2; Slavic/Central European Languages) (NLS_ISO8859_2) [N/y/?] (NEW)

NLS ISO 8859-3 (Latin 3; Esperanto, Galician, Maltese, Turkish) (NLS_ISO8859_3) [N/y/?] (NEW)

NLS ISO 8859-4 (Latin 4; old Baltic charset) (NLS_ISO8859_4) [N/y/?] (NEW)

NLS ISO 8859-5 (Cyrillic) (NLS_ISO8859_5) [N/y/?] (NEW)

NLS ISO 8859-6 (Arabic) (NLS_ISO8859_6) [N/y/?] (NEW)

NLS ISO 8859-7 (Modern Greek) (NLS_ISO8859_7) [N/y/?] (NEW)

NLS ISO 8859-9 (Latin 5; Turkish) (NLS_ISO8859_9) [N/y/?] (NEW)

NLS ISO 8859-13 (Latin 7; Baltic) (NLS_ISO8859_13) [N/y/?] (NEW)

NLS ISO 8859-14 (Latin 8; Celtic) (NLS_ISO8859_14) [N/y/?] (NEW)

NLS ISO 8859-15 (Latin 9; Western European Languages with Euro) (NLS_ISO8859_15) [N/y/?] (NEW)

NLS KOI8-R (Russian) (NLS_KOI8_R) [N/y/?] (NEW)

NLS KOI8-U/RU (Ukrainian, Belarusian) (NLS_KOI8_U) [N/y/?] (NEW)

Codepage macroman (NLS_MAC_ROMAN) [N/y/?] (NEW)

Codepage macceltic (NLS_MAC_CELTIC) [N/y/?] (NEW)

Codepage maccenteuro (NLS_MAC_CENTEURO) [N/y/?] (NEW)

Codepage maccroatian (NLS_MAC_CROATIAN) [N/y/?] (NEW)

Codepage maccyrillic (NLS_MAC_CYRILLIC) [N/y/?] (NEW)

Codepage macgaelic (NLS_MAC_GAELIC) [N/y/?] (NEW)

Codepage macgreek (NLS_MAC_GREEK) [N/y/?] (NEW)

Codepage maciceland (NLS_MAC_ICELAND) [N/y/?] (NEW)

Codepage macinuit (NLS_MAC_INUIT) [N/y/?] (NEW)

Codepage macromanian (NLS_MAC_ROMANIAN) [N/y/?] (NEW)

Codepage macturkish (NLS_MAC_TURKISH) [N/y/?] (NEW)

NLS UTF-8 (NLS_UTF8) [N/y/?] (NEW)

*

* Virtualization

*

Virtualization (VIRTUALIZATION) [N/y/?] (NEW)

*

* Kernel hacking

*

*

* printk and dmesg options

*

Show timing information on printks (PRINTK_TIME) [N/y/?] (NEW)

Default console loglevel (1-15) (CONSOLE_LOGLEVEL_DEFAULT) [7] (NEW)

Default message log level (1-7) (MESSAGE_LOGLEVEL_DEFAULT) [4] (NEW)

*

* Compile-time checks and compiler options

*

Enable __deprecated logic (ENABLE_WARN_DEPRECATED) [Y/n/?] (NEW)

Enable __must_check logic (ENABLE_MUST_CHECK) [Y/n/?] (NEW)

Warn for stack frames larger than (needs gcc 4.4) (FRAME_WARN) [2048] (NEW)

Strip assembler-generated symbols during link (STRIP_ASM_SYMS) [N/y/?] (NEW)

Enable unused/obsolete exported symbols (UNUSED_SYMBOLS) [N/y/?] (NEW)

Debug Filesystem (DEBUG_FS) [N/y/?] (NEW)

Run 'make headers_check' when building vmlinux (HEADERS_CHECK) [N/y/?] (NEW)

Enable full Section mismatch analysis (DEBUG_SECTION_MISMATCH) [N/y/?] (NEW)

Make section mismatch errors non-fatal (SECTION_MISMATCH_WARN_ONLY) [Y/n/?] (NEW)

Compile the kernel with frame pointers (FRAME_POINTER) [Y/?] (NEW) y

Magic SysRq key (MAGIC_SYSRQ) [N/y/?] (NEW)

Kernel debugging (DEBUG_KERNEL) [N/y/?] (NEW)

*

* Memory Debugging

*

Extend memmap on extra space for more information on page (PAGE_EXTENSION) [N/y/?] (NEW)

Poison pages after freeing (PAGE_POISONING) [N/y/?] (NEW)

Testcase for the marking rodata read-only (DEBUG_RODATA_TEST) [N/y/?] (NEW)

SLUB debugging on by default (SLUB_DEBUG_ON) [N/y/?] (NEW)

Enable SLUB performance statistics (SLUB_STATS) [N/y/?] (NEW)

KASan: runtime memory debugger (KASAN) [N/y/?] (NEW)

Code coverage for fuzzing (KCOV) [N/y/?] (NEW)

*

* Debug Lockups and Hangs

*

Panic on Oops (PANIC_ON_OOPS) [N/y/?] (NEW)

panic timeout (PANIC_TIMEOUT) [0] (NEW)

Enable extra timekeeping sanity checking (DEBUG_TIMEKEEPING) [N/y/?] (NEW)

*

* Lock Debugging (spinlocks, mutexes, etc...)

*

Wait/wound mutex selftests (WW_MUTEX_SELFTEST) [N/y/?] (NEW)

Stack backtrace support (STACKTRACE) [N/y/?] (NEW)

Warn for all uses of unseeded randomness (WARN_ALL_UNSEEDED_RANDOM) [N/y/?] (NEW)

*

* RCU Debugging

*

RCU CPU stall timeout in seconds (RCU_CPU_STALL_TIMEOUT) [21] (NEW)

*

* Tracers

*

Tracers (FTRACE) [N/y/?] (NEW)

Enable debugging of DMA-API usage (DMA_API_DEBUG) [N/y/?] (NEW)

*

* Runtime Testing

*

Perform an atomic64_t self-test (ATOMIC64_SELFTEST) [N/y/?] (NEW)

Test functions located in the hexdump module at runtime (TEST_HEXDUMP) [N/y] (NEW)

Test functions located in the string_helpers module at runtime (TEST_STRING_HELPERS) [N/y] (NEW)

Test kstrto*() family of functions at runtime (TEST_KSTRTOX) [N/y] (NEW)

Test printf() family of functions at runtime (TEST_PRINTF) [N/y] (NEW)

Test bitmap_*() family of functions at runtime (TEST_BITMAP) [N/y/?] (NEW)

Test functions located in the uuid module at runtime (TEST_UUID) [N/y] (NEW)

Perform selftest on resizable hash table (TEST_RHASHTABLE) [N/y/?] (NEW)

Perform selftest on hash functions (TEST_HASH) [N/y/?] (NEW)

Test firmware loading via userspace interface (TEST_FIRMWARE) [N/y/?] (NEW)

sysctl test driver (TEST_SYSCTL) [N/y/?] (NEW)

udelay test driver (TEST_UDELAY) [N/y/?] (NEW)

Memtest (MEMTEST) [N/y/?] (NEW)

Trigger a BUG when data corruption is detected (BUG_ON_DATA_CORRUPTION) [N/y/?] (NEW)

*

* Sample kernel code

*

Sample kernel code (SAMPLES) [N/y/?] (NEW)

Undefined behaviour sanity checker (UBSAN) [N/y/?] (NEW)

Filter access to /dev/mem (STRICT_DEVMEM) [N/y/?] (NEW)

Write the current PID to the CONTEXTIDR register (PID_IN_CONTEXTIDR) [N/y/?] (NEW)

Randomize TEXT_OFFSET at build time (ARM64_RANDOMIZE_TEXT_OFFSET) [N/y/?] (NEW)

Warn on W+X mappings at boot (DEBUG_WX) [N/y/?] (NEW)

Align linker sections up to SECTION_SIZE (DEBUG_ALIGN_RODATA) [N/y/?] (NEW)

*

* CoreSight Tracing Support

*

CoreSight Tracing Support (CORESIGHT) [N/y/?] (NEW)

*

* Security options

*

Enable access key retention support (KEYS) [N/y/?] (NEW)

Restrict unprivileged access to the kernel syslog (SECURITY_DMESG_RESTRICT) [N/y/?] (NEW)

Enable different security models (SECURITY) [N/y/?] (NEW)

Enable the securityfs filesystem (SECURITYFS) [N/y/?] (NEW)

Harden memory copies between kernel and userspace (HARDENED_USERCOPY) [N/y/?] (NEW)

Harden common str/mem functions against buffer overflows (FORTIFY_SOURCE) [N/y/?] (NEW)

Force all usermode helper calls through a single binary (STATIC_USERMODEHELPER) [N/y/?] (NEW)

Default security module

> 1. Unix Discretionary Access Controls (DEFAULT_SECURITY_DAC) (NEW)

choice[1]: 1

*

* Cryptographic API

*

Cryptographic API (CRYPTO) [N/y/?] (NEW)

*

* Library routines

*

CRC-CCITT functions (CRC_CCITT) [N/y/?] (NEW)

CRC16 functions (CRC16) [N/y/?] (NEW)

CRC calculation for the T10 Data Integrity Field (CRC_T10DIF) [N/y/?] (NEW)

CRC ITU-T V.41 functions (CRC_ITU_T) [N/y/?] (NEW)

CRC32/CRC32c functions (CRC32) [Y/?] (NEW) y

CRC32 perform self test on init (CRC32_SELFTEST) [N/y/?] (NEW)

CRC32 implementation

> 1. Slice by 8 bytes (CRC32_SLICEBY8) (NEW)

2. Slice by 4 bytes (CRC32_SLICEBY4) (NEW)

3. Sarwate's Algorithm (one byte at a time) (CRC32_SARWATE) (NEW)

4. Classic Algorithm (one bit at a time) (CRC32_BIT) (NEW)

choice[1-4?]:

CRC4 functions (CRC4) [N/y/?] (NEW)

CRC7 functions (CRC7) [N/y/?] (NEW)

CRC32c (Castagnoli, et al) Cyclic Redundancy-Check (LIBCRC32C) [N/y/?] (NEW)

CRC8 function (CRC8) [N/y/?] (NEW)

PRNG perform self test on init (RANDOM32_SELFTEST) [N/y/?] (NEW)

XZ decompression support (XZ_DEC) [N/y/?] (NEW)

CORDIC algorithm (CORDIC) [N/y/?] (NEW)

JEDEC DDR data (DDR) [N/y/?] (NEW)

IRQ polling library (IRQ_POLL) [N/y/?] (NEW)

Test string functions (STRING_SELFTEST) [N/y] (NEW)

#

# configuration written to .config

#

root@nems:/usr/src/linux-headers-4.14.26#

Wow, that’s a lot of output.
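Since the one answer that matters is buried somewhere in all of that, here is a minimal sketch for pulling a single symbol's state out of a config file instead of scrolling. The helper name `config_state` is hypothetical, and it assumes the usual `.config` layout, where an enabled option appears as `CONFIG_X=y` and a disabled one as the comment `# CONFIG_X is not set`.

```shell
#!/bin/sh
# config_state SYMBOL FILE
# Hypothetical helper: prints the value of SYMBOL if it is set in FILE
# (e.g. "y" or "m"), "not set" if FILE carries the "# SYMBOL is not set"
# comment, and "absent" if FILE does not mention SYMBOL at all.
config_state() {
    sym=$1
    file=$2
    if line=$(grep -E "^${sym}=" "$file"); then
        # Keep only what follows the first "="
        printf '%s\n' "${line#*=}"
    elif grep -qx "# ${sym} is not set" "$file"; then
        echo "not set"
    else
        echo "absent"
    fi
}

# Example (path taken from the session above):
# config_state CONFIG_FSL_ERRATUM_A008585 /usr/src/linux-headers-4.14.26/.config
```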

Now, a quick reboot and re-connect, and then check the running kernel…

nemsadmin@nems:/tmp/test1$ cat /proc/config.gz | gunzip | grep CONFIG_FSL_ERRATUM_A008585

# CONFIG_FSL_ERRATUM_A008585 is not set

Darn.

Strangely, the default config (my config) shows that my setting remains there…

nemsadmin@nems:/boot$ grep CONFIG_FSL_ERRATUM_A008585 /boot/config-4.14.26

CONFIG_FSL_ERRATUM_A008585=y

So maybe I just didn’t compile it correctly.
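One thing worth spelling out: `/proc/config.gz` reflects the configuration compiled into the kernel that is actually running, while `/boot/config-4.14.26` is only a text file on disk, so a change to the file cannot by itself change what the running kernel reports. A minimal sketch for seeing the mismatch side by side follows. `show_symbol` is a hypothetical helper name, and the example assumes the running kernel exposes `/proc/config.gz`, as it does in the session above.

```shell
#!/bin/sh
# show_symbol SYMBOL RUNNING_CONFIG DISK_CONFIG
# Hypothetical helper: prints the line for SYMBOL from each plain-text
# config file and returns 0 only when the two lines agree.
show_symbol() {
    sym=$1
    # "|| true" keeps a missing entry from aborting scripts run with "set -e"
    running=$(grep -E "^(# )?${sym}[= ]" "$2" || true)
    on_disk=$(grep -E "^(# )?${sym}[= ]" "$3" || true)
    echo "running: ${running:-<no entry>}"
    echo "on disk: ${on_disk:-<no entry>}"
    [ "$running" = "$on_disk" ]
}

# Example: decompress the running config first, then compare.
# gunzip -c /proc/config.gz > /tmp/running.config
# show_symbol CONFIG_FSL_ERRATUM_A008585 /tmp/running.config /boot/config-4.14.26
```

A non-zero exit status here means the file on disk and the running kernel disagree, which is exactly the situation shown above.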

I wish someone in the threads had posted how to actually add this. I mean, as a teacher, I try not to make assumptions. Saying to someone “set CONFIG_FSL_ERRATUM_A008585=y in your kernel” is a big assumption. Even I, the Bald Nerd, am not quite sure how to do that, yet I’m sure the people who make those statements are. Let’s instead post the actual commands or at least point the person in the right direction.

I’ll try to figure it out in the next couple of days, and will post my step-by-step. I’ve posted a cry for help in the forum thread related to the Debian image I’m using. Hopefully they can point me in the right direction.

UPDATE: Ohmigosh, it just hit me… this is using make, yet I didn’t install!

Let’s try it, just for good measure:

root@nems:/usr/src# cd linux-headers-4.14.26/

root@nems:/usr/src/linux-headers-4.14.26# make install

scripts/kconfig/conf --silentoldconfig Kconfig

/bin/bash ./arch/arm64/boot/install.sh 4.14.26 \

arch/arm64/boot/Image System.map "/boot"

/bin/bash: ./arch/arm64/boot/install.sh: No such file or directory

arch/arm64/boot/Makefile:40: recipe for target 'install' failed

make[1]: *** [install] Error 127

arch/arm64/Makefile:130: recipe for target 'install' failed

make: *** [install] Error 2

Grr….

root@nems:/usr/src/linux-headers-4.14.26# ls arch/arm64/boot/

dts Makefile

Yeah, there’s no install.sh there.
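A likely explanation, offered as an assumption rather than a diagnosis: `/usr/src/linux-headers-4.14.26` comes from a headers package, which typically ships the Kconfig and Makefile scaffolding needed to build out-of-tree modules but not the full kernel source, so there would be nothing for `make install` to install. A rough heuristic for telling the two kinds of tree apart is sketched below. `tree_kind` is a hypothetical name, and using `init/main.c` as the marker file is an assumption, not an official test.

```shell
#!/bin/sh
# tree_kind DIR
# Hypothetical heuristic: a full kernel source tree contains C sources such
# as init/main.c, while a headers-only tree usually has a top-level Makefile
# without them.
tree_kind() {
    dir=$1
    if [ -f "$dir/init/main.c" ]; then
        echo "full source"
    elif [ -f "$dir/Makefile" ]; then
        echo "headers only"
    else
        echo "not a kernel tree"
    fi
}

# Example (path taken from the session above):
# tree_kind /usr/src/linux-headers-4.14.26
```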

I’m sure it’s something simple I’m missing since I’m not experienced at this kind of thing.

More to come!